Early last summer, a small group of senior leaders and responsible AI experts at Microsoft started using technology from OpenAI similar to what the world now knows as ChatGPT. Even for those who had worked closely with the developers of this technology at OpenAI since 2019, the most recent progress seemed remarkable. AI developments we had expected around 2033 would arrive in 2023 instead.

Looking back at the history of our industry, certain watershed years stand out. For example, internet usage exploded with the popularity of the browser in 1995, and smartphone growth accelerated in 2007 with the launch of the iPhone. It’s now likely that 2023 will mark a critical inflection point for artificial intelligence. The opportunities for people are huge. And the responsibilities for those of us who develop this technology are bigger still. We need to use this watershed year not just to launch new AI advances, but to responsibly and effectively address both the promises and perils that lie ahead.

The stakes are high. AI may well represent the most consequential technological advance of our lifetime. And while that’s saying a lot, there’s good reason to say it. Today’s cutting-edge AI is a powerful tool for advancing critical thinking and stimulating creative expression. It makes it possible not only to search for information but to seek answers to questions. It can help people uncover insights amid complex data and processes. It speeds up our ability to express what we learn. Perhaps most important, it’s going to do all these things better and better in the coming months and years.

I’ve had the opportunity for many months to use not only ChatGPT, but the internal AI services under development inside Microsoft. Every day, I find myself learning new ways to get the most from the technology and, even more important, thinking about the broader dimensions that will come from this new AI era. Questions abound.

For example, what will this change?

Over time, the short answer is almost everything. Because, like no technology before it, these AI advances augment humanity’s ability to think, reason, learn and express ourselves. In effect, the industrial revolution is now coming to knowledge work. And knowledge work is fundamental to everything.

This brings huge opportunities to better the world. AI will improve productivity and stimulate economic growth. It will reduce the drudgery in many jobs and, when used effectively, it will help people be more creative in their work and impactful in their lives. The ability to discover new insights in large data sets will drive new advances in medicine, new frontiers in science, new improvements in business, and new and stronger defenses for cyber and national security.

Will all the changes be good?

While I wish the answer were yes, of course that’s not the case. As with every technology before it, some people, communities and countries will turn this advance into both a tool and a weapon. Some unfortunately will use this technology to exploit the flaws in human nature, deliberately target people with false information, undermine democracy and explore new ways to advance the pursuit of evil. Unfortunately, new technologies typically bring out both the best and worst in people.

Perhaps more than anything, this creates a profound sense of responsibility. At one level, for all of us; and, at an even higher level, for those of us involved in the development and deployment of the technology itself.

There are days when I’m optimistic and moments when I’m pessimistic about how humanity will put AI to use. More than anything, we all need to be determined. We must enter this new era with enthusiasm for the promise, and yet with our eyes wide open and resolute in addressing the inevitable pitfalls that also lie ahead.

The good news is that we’re not starting from scratch.

At Microsoft, we’ve been working to build a responsible AI infrastructure since 2017. This has moved in tandem with similar work in the cybersecurity, privacy and digital safety spaces. It is connected to a larger enterprise risk management framework that has helped us to create the principles, policies, processes, tools and governance systems for responsible AI. Along the way, we have worked and learned together with the equally committed responsible AI experts at OpenAI.

Now we must recommit ourselves to this responsibility and call upon the past six years of work to do even more and move even faster. At both Microsoft and OpenAI, we recognize that the technology will keep evolving, and we are both committed to ongoing engagement and improvement.

The foundation for responsible AI

For six years, Microsoft has invested in a cross-company program to ensure that our AI systems are responsible by design. In 2017, we launched the Aether Committee with researchers, engineers and policy experts to focus on responsible AI issues and help craft the AI principles that we adopted in 2018. In 2019, we created the Office of Responsible AI to coordinate responsible AI governance and launched the first version of our Responsible AI Standard, a framework for translating our high-level principles into actionable guidance for our engineering teams. In 2021, we described the key building blocks to operationalize this program, including an expanded governance structure, training to equip our employees with new skills, and processes and tooling to support implementation. And, in 2022, we strengthened our Responsible AI Standard and took it to its second version. This sets out how we will build AI systems using practical approaches for identifying, measuring and mitigating harms ahead of time, and ensuring that controls are engineered into our systems from the outset.

Our learning from the design and implementation of our responsible AI program has been constant and critical. One of the first things we did in the summer of 2022 was to engage a multidisciplinary team to work with OpenAI, build on their existing research and assess how the latest technology would work without any additional safeguards applied to it. As with all AI systems, it’s important to approach product-building efforts with an initial baseline that provides a deep understanding of not just a technology’s capabilities, but its limitations. Together, we identified some well-known risks, such as the ability of a model to generate content that perpetuated stereotypes, as well as the technology’s capacity to fabricate convincing, yet factually incorrect, responses. As with any facet of life, the first key to solving a problem is to understand it.

With the benefit of these early insights, the experts in our responsible AI ecosystem took additional steps. Our researchers, policy experts and engineering teams joined forces to study the potential harms of the technology, build bespoke measurement pipelines and iterate on effective mitigation strategies. Much of this work was without precedent and some of it challenged our existing thinking. At both Microsoft and OpenAI, people made rapid progress. It reinforced to me the depth and breadth of expertise needed to advance the state of the art in responsible AI, as well as the growing need for new norms, standards and laws.

Building upon this foundation

As we look to the future, we will do even more. As AI models continue to advance, we know we will need to address new and open research questions, close measurement gaps and design new practices, patterns and tools. We’ll approach the road ahead with humility and a commitment to listening, learning and improving every day.

But our own efforts and those of other like-minded organizations won’t be enough. This transformative moment for AI calls for a wider lens on the impacts of the technology – both positive and negative – and a much broader dialogue among stakeholders. We need to have wide-ranging and deep conversations and commit to joint action to define the guardrails for the future.

We believe we should focus on three key goals.

First, we must ensure that AI is built and used responsibly and ethically. History teaches us that transformative technologies like AI require new rules of the road. Proactive, self-regulatory efforts by responsible companies will help pave the way for these new laws, but we know that not all organizations will adopt responsible practices voluntarily. Countries and communities will need to use democratic law-making processes to engage in whole-of-society conversations about where the lines should be drawn to ensure that people have protection under the law. In our view, effective AI regulations should center on the highest risk applications and be outcomes-focused and durable in the face of rapidly advancing technologies and changing societal expectations. To spread the benefits of AI as broadly as possible, regulatory approaches around the globe will need to be interoperable and adaptive, just like AI itself.

Second, we must ensure that AI advances international competitiveness and national security. While we may wish it were otherwise, we need to acknowledge that we live in a fragmented world where technological superiority is core to international competitiveness and national security. AI is the next frontier of that competition. With the combination of OpenAI and Microsoft, and DeepMind within Google, the United States is well placed to maintain technological leadership. Others are already investing, and we should look to expand that footing among other nations committed to democratic values. But it’s also important to recognize that the third leading player in this next wave of AI is the Beijing Academy of Artificial Intelligence. And, just last week, China’s Baidu committed itself to an AI leadership role. The United States and democratic societies more broadly will need multiple and strong technology leaders to help advance AI, with broader public policy leadership on topics including data, AI supercomputing infrastructure and talent.

Third, we must ensure that AI serves society broadly, not narrowly. History has also shown that significant technological advances can outpace the ability of people and institutions to adapt. We need new initiatives to keep pace, so that workers can be empowered by AI, students can achieve better educational outcomes, and individuals and organizations can enjoy fair and inclusive economic growth. Our most vulnerable groups, including children, will need more support than ever to thrive in an AI-powered world, and we must ensure that this next wave of technological innovation enhances people’s mental health and well-being, instead of gradually eroding them. Finally, AI must serve people and the planet. AI can play a pivotal role in helping address the climate crisis, including by analyzing environmental outcomes and advancing the development of clean energy technology while also accelerating the transition to clean electricity.

To meet this moment, we will expand our public policy efforts to support these goals. We are committed to forming new and deeper partnerships with civil society, academia, governments and industry. Working together, we all need to gain a more complete understanding of the concerns that must be addressed and the solutions that are likely to be the most promising. Now is the time to partner on the rules of the road for AI.

Finally, as I’ve found myself thinking about these issues in recent months, time and again my mind has returned to a few connecting thoughts.

First, these issues are too important to be left to technologists alone. And, equally, there’s no way to anticipate, much less address, these advances without involving tech companies in the process. More than ever, this work will require a big tent.

Second, the future of artificial intelligence requires a multidisciplinary approach. The tech sector was built by engineers. However, if AI is truly going to serve humanity, the future requires that we bring together computer and data scientists with people from every walk of life and every way of thinking. More than ever, technology needs people schooled in the humanities and social sciences, and with more than an average dose of common sense.

Finally, and perhaps most important, humility will serve us better than self-confidence. There will be no shortage of people with opinions and predictions. Many will be worth considering. But I’ve often found myself thinking mostly about my favorite quotation from Walt Whitman – or Ted Lasso, depending on your preference.

“Be curious, not judgmental.”

We’re entering a new era. We need to learn together.

Tags: AI, artificial intelligence, ChatGPT, OpenAI, Responsible AI

Published By

Latest entries

allPost2025.01.31Pardoned Jan. 6 rioter sentenced to 10 years for fatal DWI crash

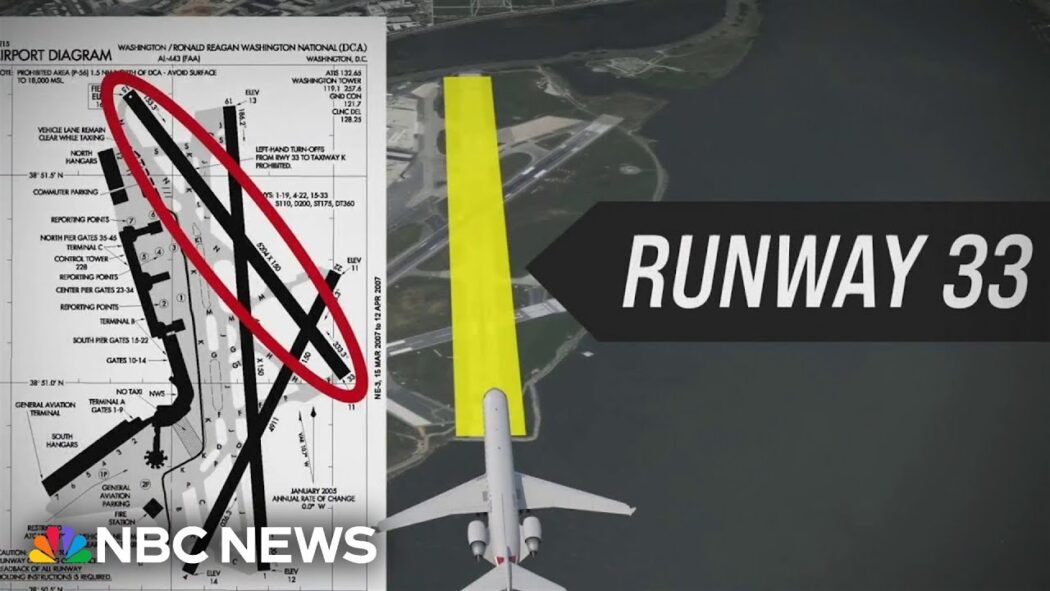

allPost2025.01.31Pardoned Jan. 6 rioter sentenced to 10 years for fatal DWI crash allPost2025.01.31After crash, questions about air traffic control staffing

allPost2025.01.31After crash, questions about air traffic control staffing allPost2025.01.3110-year-old grieves friends and coach on Potomac flight

allPost2025.01.3110-year-old grieves friends and coach on Potomac flight allPost2025.01.31‘It’s tragic’: Former figure skating Olympian reacts to skaters who died in crash

allPost2025.01.31‘It’s tragic’: Former figure skating Olympian reacts to skaters who died in crash

75 replies on “Meeting the AI moment: advancing the future through responsible AI”

Hello. fantastic job. I did not anticipate this. This is a splendid story. Thanks!

What Is Aizen Power? Aizen Power is presented as a distinctive dietary supplement with a singular focus on addressing the root cause of smaller phalluses

Real wonderful info can be found on blog.

Hi there, You have done an incredible job. I will definitely digg it and personally recommend to my friends. I’m confident they will be benefited from this web site.

whoah this blog is great i really like reading your articles. Keep up the great paintings! You already know, a lot of persons are searching round for this info, you can help them greatly.

Hi, Neat post. There’s an issue together with your website in internet explorer, may check this?K IE still is the market chief and a huge component of folks will miss your excellent writing because of this problem.

Sweet website , super design, rattling clean and use friendly.

Appreciate it for helping out, great information. “If at first you don’t succeed, find out if the loser gets anything.” by Bill Lyon.

What i don’t understood is in truth how you’re no longer really much more smartly-appreciated than you may be now. You’re very intelligent. You recognize thus significantly with regards to this matter, made me for my part consider it from so many numerous angles. Its like women and men don’t seem to be fascinated except it is something to do with Girl gaga! Your personal stuffs outstanding. Always maintain it up!

Hey there this is kind of of off topic but I was wanting to know if blogs use WYSIWYG editors or if you have to manually code with HTML. I’m starting a blog soon but have no coding know-how so I wanted to get guidance from someone with experience. Any help would be greatly appreciated!

I have been browsing on-line greater than three hours these days, but I never found any interesting article like yours. It is beautiful value sufficient for me. In my view, if all webmasters and bloggers made excellent content material as you did, the net might be a lot more useful than ever before. “Oh, that way madness lies let me shun that.” by William Shakespeare.

Having read this I thought it was very informative. I appreciate you taking the time and effort to put this article together. I once again find myself spending way to much time both reading and commenting. But so what, it was still worth it!

Fantastic blog! Do you have any suggestions for aspiring writers? I’m planning to start my own site soon but I’m a little lost on everything. Would you propose starting with a free platform like WordPress or go for a paid option? There are so many choices out there that I’m totally confused .. Any recommendations? Cheers!

What is Leanbiome? LeanBiome is a dietary supplement that is formulated with nine critically-researched lean bacteria species.

Java Burn is the world’s first and only 100 safe and proprietary formula designed to boost the speed and efficiency of your metabolism by mixing with the natural ingredients in coffee.

Good blog! I truly love how it is simple on my eyes and the data are well written. I am wondering how I could be notified when a new post has been made. I have subscribed to your RSS which must do the trick! Have a great day!

Some genuinely great posts on this website , thankyou for contribution.

Hello, i think that i saw you visited my blog thus i came to “return the favor”.I’m trying to find things to improve my site!I suppose its ok to use some of your ideas!!

I am constantly searching online for tips that can benefit me. Thanks!

It’s really a cool and helpful piece of information. I’m happy that you simply shared this useful info with us. Please keep us up to date like this. Thank you for sharing.

whoah this blog is magnificent i love reading your posts. Keep up the good work! You know, many people are hunting around for this information, you can help them greatly.

I wanted to create you that very small observation so as to say thank you yet again for the awesome opinions you’ve documented on this page. This is really extremely generous with you giving easily what a lot of people could have offered as an electronic book to generate some money on their own, certainly given that you could have done it if you ever wanted. The inspiring ideas in addition acted like a fantastic way to know that the rest have the identical eagerness really like mine to find out lots more with regard to this condition. I know there are some more enjoyable moments ahead for those who read through your website.

Thanks so much for giving everyone an extremely superb opportunity to read articles and blog posts from this website. It can be very excellent and as well , packed with a good time for me personally and my office co-workers to search the blog no less than three times per week to find out the new stuff you will have. And definitely, I’m always impressed considering the outstanding ideas you serve. Selected 2 ideas in this posting are undoubtedly the simplest I’ve ever had.

What Is Neotonics? Neotonics is a skin and gut health supplement that will help with improving your gut microbiome to achieve better skin and gut health.

Your style is so unique compared to many other people. Thank you for publishing when you have the opportunity,Guess I will just make this bookmarked.2

I envy your work, thankyou for all the interesting articles.

I do not even know how I ended up here, but I thought this post was good. I do not know who you are but definitely you’re going to a famous blogger if you aren’t already 😉 Cheers!

Hiya, I’m really glad I’ve found this information. Today bloggers publish just about gossips and web and this is actually annoying. A good website with exciting content, that’s what I need. Thank you for keeping this web site, I’ll be visiting it. Do you do newsletters? Cant find it.

I wanted to post you the very little note just to give many thanks the moment again just for the extraordinary strategies you’ve documented here. It was really surprisingly generous with you to make unhampered all most people might have sold as an ebook in order to make some money on their own, and in particular seeing that you could possibly have done it if you decided. The inspiring ideas additionally acted like the fantastic way to know that some people have the same dream much like my own to realize more and more on the topic of this matter. I am sure there are lots of more pleasant opportunities ahead for folks who examine your site. phenq

I wanted to write you that little remark to help thank you so much as before just for the amazing principles you have provided at this time. It has been quite surprisingly generous of people like you to deliver freely what exactly some people could have offered for sale for an ebook in order to make some cash on their own, principally since you might have tried it if you ever decided. The principles as well worked as the great way to be certain that other people online have the same passion like my very own to figure out significantly more with respect to this problem. I think there are numerous more fun times in the future for folks who check out your blog. lottery defeater reviews

I intended to draft you that very little remark in order to say thanks once again with your stunning opinions you have documented at this time. It was seriously open-handed of people like you to deliver publicly what a lot of people could possibly have offered for sale as an e book to end up making some bucks for their own end, and in particular since you could possibly have tried it if you ever wanted. Those thoughts also served like the great way to know that many people have the identical eagerness similar to my personal own to know the truth good deal more concerning this condition. Certainly there are several more enjoyable sessions in the future for people who read your site. the growth matrix

I needed to draft you this little observation in order to give many thanks the moment again for these remarkable knowledge you’ve discussed here. It has been quite incredibly open-handed with you to convey without restraint just what a few individuals could have distributed for an ebook to get some money for themselves, chiefly considering that you could possibly have done it in case you wanted. These solutions also acted to be the easy way to understand that other people have a similar dreams just like my own to know the truth good deal more with respect to this matter. I believe there are some more fun situations up front for individuals who examine your blog post. phenq

Needed to create you a very little word to finally give thanks yet again for these superb secrets you have shared in this article. This is really tremendously generous with people like you to grant openly precisely what many individuals could have marketed for an e-book to end up making some bucks for their own end, and in particular considering that you could possibly have tried it in case you decided. Those principles also acted to be a good way to know that some people have a similar passion just like my very own to grasp many more in regard to this problem. I’m sure there are thousands of more pleasant times ahead for those who examine your site. denticore reviews

Needed to put you this little word to say thanks as before considering the gorgeous methods you’ve documented here. This is surprisingly generous of you to provide freely all that most people would have advertised as an e book to help with making some cash on their own, primarily since you might have done it in the event you decided. Those ideas additionally served to become easy way to understand that other individuals have the identical dream just like mine to realize a little more regarding this problem. I am sure there are millions of more pleasurable moments in the future for many who find out your blog. boostaro reviews

Needed to send you one little observation to help say thanks over again for your exceptional methods you have documented on this site. This has been simply surprisingly generous with you to make easily exactly what most of us would’ve advertised for an electronic book to earn some profit for themselves, certainly considering the fact that you might have done it in case you desired. Those creative ideas also worked to be a fantastic way to fully grasp that other people have the same desire similar to my own to find out a little more in terms of this issue. I believe there are some more fun instances ahead for those who scan through your website. red boost reviews

I needed to compose you that little note to help give many thanks the moment again for these lovely tactics you have contributed here. It was so shockingly generous of people like you to offer freely what a lot of folks could have sold as an electronic book to make some cash for their own end, especially considering the fact that you could possibly have done it if you desired. Those tactics in addition served to become a good way to recognize that other individuals have a similar dream just like my personal own to find out a lot more regarding this matter. Certainly there are numerous more fun periods in the future for individuals who start reading your site. prodentim

Needed to post you the little observation just to thank you very much over again on the striking ideas you’ve contributed above. It was particularly open-handed of you to convey unreservedly precisely what some people might have offered for sale as an e book to earn some dough on their own, even more so since you could possibly have done it if you ever decided. The tips additionally served to provide a fantastic way to know that other people online have the identical desire really like my personal own to grasp way more concerning this issue. I think there are some more enjoyable sessions in the future for individuals that looked over your blog. emperors vigor tonic

I wanted to draft you this tiny remark to be able to thank you as before with your stunning suggestions you’ve provided above. It has been simply tremendously open-handed of you to provide unhampered just what some people might have advertised for an e book to help make some cash for their own end, mostly considering that you might well have tried it if you ever considered necessary. The concepts likewise served to be the fantastic way to be aware that other people online have the identical keenness just as my own to understand many more related to this problem. I’m certain there are millions of more fun instances up front for folks who read through your blog post. growth matrix

This actually answered my downside, thank you!

I used to be suggested this web site through my cousin. I am no longer positive whether or not this publish is written by way of him as nobody else understand such precise approximately my difficulty. You’re incredible! Thank you!

I just couldn’t leave your web site before suggesting that I extremely enjoyed the usual information a person provide in your visitors? Is gonna be again frequently to check out new posts

Thanks for the auspicious writeup. It if truth be told was a amusement account it. Glance advanced to far delivered agreeable from you! By the way, how could we keep up a correspondence?

Aw, this was a really nice post. In concept I would like to put in writing like this moreover – taking time and actual effort to make a very good article… but what can I say… I procrastinate alot and in no way appear to get something done.

I’m often to running a blog and i actually admire your content. The article has really peaks my interest. I am going to bookmark your site and preserve checking for brand new information.

What’s Happening i’m new to this, I stumbled upon this I have found It absolutely useful and it has aided me out loads. I hope to contribute & help other users like its aided me. Good job.

I am continuously invstigating online for posts that can assist me. Thank you!

I conceive this website contains some rattling wonderful info for everyone. “A kiss, is the physical transgression of the mental connection which has already taken place.” by Tanielle Naus.

Hey! This is kind of off topic but I need some help from an established blog. Is it very hard to set up your own blog? I’m not very techincal but I can figure things out pretty quick. I’m thinking about making my own but I’m not sure where to begin. Do you have any ideas or suggestions? Thanks

Really informative and superb body structure of subject material, now that’s user genial (:.

I gotta bookmark this internet site it seems invaluable handy

Only wanna tell that this is extremely helpful, Thanks for taking your time to write this.

You have brought up a very wonderful details, regards for the post.

Outstanding post, you have pointed out some excellent points, I too believe this s a very great website.

Great tremendous issues here. I?¦m very satisfied to see your article. Thank you so much and i am taking a look ahead to touch you. Will you please drop me a mail?

I as well as my pals appeared to be going through the nice techniques found on your web blog and so instantly I got an awful suspicion I never expressed respect to the web blog owner for those techniques. My guys are actually so stimulated to learn them and have truly been loving these things. Appreciate your truly being really kind and also for making a decision on this kind of great subject areas millions of individuals are really needing to learn about. Our honest regret for not expressing appreciation to you sooner.

I needed to compose you a very small remark to thank you so much the moment again for the breathtaking views you have provided on this site. It’s quite wonderfully open-handed of people like you to make openly all that many individuals would have offered as an electronic book to make some cash for their own end, principally given that you could possibly have done it in case you wanted. These strategies likewise worked to become fantastic way to fully grasp that other individuals have similar zeal like my own to figure out whole lot more when it comes to this condition. I think there are some more pleasant occasions ahead for individuals who examine your blog post. prostadine reviews

I intended to draft you that very little note to say thank you again regarding the pretty knowledge you’ve discussed in this article. It was really unbelievably open-handed with people like you to supply freely just what most people would have made available as an ebook in order to make some bucks for their own end, principally seeing that you could possibly have done it in case you considered necessary. Those secrets in addition served as the great way to realize that most people have a similar zeal much like my personal own to figure out very much more with respect to this condition. I think there are many more pleasurable opportunities up front for those who take a look at your site. java burn reviews

I got what you intend, appreciate it for putting up.Woh I am delighted to find this website through google.

Very nice info and straight to the point. I don’t know if this is actually the best place to ask, but do you folks have any idea where to hire some professional writers? Thank you 🙂

Hello there. I found your blog using MSN. This is a really well-written article. I will make sure to bookmark it and return to read more of your useful info. Thanks for the post. I will definitely come back.

You are my inspiration. I own a few blogs and sometimes run out of things to post :(

Magnificent post, very informative. I wonder why the other specialists in this sector don’t notice this. You must continue your writing. I am sure you have a great reader base already!

You really do help produce serious articles, I would say. This is the very first time I have visited your website, and so far I am surprised by the analysis you did to make this post so good. Excellent work!

Magnificent stuff from you, man. I have read your work before and you’re simply excellent. I really like what you have here, what you’re saying, and the way you say it. You make it entertaining and you still manage to keep it sensible. I can’t wait to learn much more from you. This is really a great site.

With everything that seems to be developing in this area, many of your opinions are rather radical. Having said that, I am sorry, but I do not subscribe to your entire theory, refreshing though it is. It appears to me that your remarks are not entirely justified, and in fact you yourself do not seem thoroughly confident of the assertion. In any event, I did take pleasure in reading it.

It’s hard to find knowledgeable people on this topic, but you sound like you know what you’re talking about! Thanks