Tested and Compatible with OpenAI ChatGPT, Azure OpenAI Service, Perplexity AI and Llama!

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure, featuring streaming capabilities and extensive configuration options.

- Streaming mode: Real-time interaction with the GPT model.

- Query mode: Single input-output interactions with the GPT model.

- Interactive mode: The interactive mode allows for a more conversational experience with the model. Prints the token usage when combined with query mode.

- Thread-based context management: Enjoy seamless conversations with the GPT model with individualized context for each thread, much like your experience on the OpenAI website. Each unique thread has its own history, ensuring relevant and coherent responses across different chat instances.

- Sliding window history: To stay within token limits, the chat history automatically trims while still preserving the necessary context. The size of this window can be adjusted through the context-window setting.

- Custom context from any source: You can provide the GPT model with a custom context during conversation. This context can be piped in from any source, such as local files, standard input, or even another program. This flexibility allows the model to adapt to a wide range of conversational scenarios.

- Model listing: Access a list of available models using the -l or --list-models flag.

- Thread listing: Display a list of active threads using the --list-threads flag.

- Advanced configuration options: The CLI supports a layered configuration system where settings can be specified through default values, a config.yaml file, and environment variables. For quick adjustments, various --set- flags are provided. To verify your current settings, use the --config or -c flag.

- Availability Note: This CLI supports gpt-4, gpt-3.5-turbo, and Perplexity’s models (e.g., llama-3.1-sonar-small-128k-online). However, the specific ChatGPT model used on chat.openai.com may not be available via the OpenAI API.

We’re excited to introduce support for prompt files with the --prompt flag in version 1.7.1! This feature allows you to provide a rich and detailed context for your conversations directly from a file.

The --prompt flag lets you specify a file containing the initial context or instructions for your ChatGPT conversation. This is especially useful when you have detailed instructions or context that you want to reuse across different conversations.

To use the --prompt flag, pass the path of your prompt file like this:

chatgpt --prompt path/to/your/prompt.md "Use a pipe or provide a query here"

The contents of prompt.md will be read and used as the initial context for the conversation, while the query you provide directly will serve as the specific question or task you want to address.

Here’s a fun example where you can use the output of a git diff command as a prompt:

git diff | chatgpt --prompt ../prompts/write_pull-request.md

In this example, the content from the write_pull-request.md prompt file is used to guide the model’s response based on the diff data from git diff.

For a variety of ready-to-use prompts, check out this awesome prompts repository. These can serve as great starting points or inspiration for your own custom prompts!

You can install chatgpt-cli using Homebrew:

brew tap kardolus/chatgpt-cli && brew install chatgpt-cli

For a quick and easy installation without compiling, you can directly download the pre-built binary for your operating system and architecture:

macOS (arm64, Apple Silicon):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-darwin-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

macOS (amd64, Intel):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-darwin-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

Linux (amd64):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

Linux (arm64):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

Linux (386):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-386 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

FreeBSD (amd64):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-freebsd-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

FreeBSD (arm64):

curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-freebsd-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

Download the binary from this link and add it to your PATH.

Choose the appropriate command for your system, which will download the binary, make it executable, and move it to your /usr/local/bin directory (or %PATH% on Windows) for easy access.
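Picking the right download can be scripted. The following is a minimal sketch, not part of the CLI: `release_asset` is a hypothetical helper that maps the output of `uname -s` and `uname -m` to the binary names used in the download URLs above. It assumes the usual `uname` spellings (for example `x86_64` and `aarch64`) and does not check that a given OS and architecture pair is actually published.

```shell
# Hypothetical helper: map an OS name and machine type to a release asset name.
# Usage: release_asset OS MACHINE   (e.g. "$(uname -s)" "$(uname -m)")
release_asset() {
  local os arch
  case "$1" in
    Darwin)  os=darwin ;;
    Linux)   os=linux ;;
    FreeBSD) os=freebsd ;;
    *) echo "unsupported OS: $1" >&2; return 1 ;;
  esac
  case "$2" in
    x86_64|amd64)  arch=amd64 ;;
    arm64|aarch64) arch=arm64 ;;
    i386|i686)     arch=386 ;;
    *) echo "unsupported architecture: $2" >&2; return 1 ;;
  esac
  # Asset names follow the pattern chatgpt-<os>-<arch> seen in the URLs above.
  printf 'chatgpt-%s-%s\n' "$os" "$arch"
}
```

Calling `release_asset "$(uname -s)" "$(uname -m)"` prints the asset name for the current machine, which can then be appended to the release URL prefix shown in the commands above.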

- Set the OPENAI_API_KEY environment variable to your OpenAI API secret key. To do so, add the following line to your shell profile (e.g., ~/.bashrc, ~/.zshrc, or ~/.bash_profile), replacing your_api_key with your actual key:

export OPENAI_API_KEY="your_api_key"

- To enable history tracking across CLI calls, create a ~/.chatgpt-cli directory using the command:

mkdir -p ~/.chatgpt-cli

Once this directory is in place, the CLI automatically manages the message history for each “thread” you converse with. The history operates like a sliding window, maintaining context up to a configurable token maximum. This ensures a balance between maintaining conversation context and achieving optimal performance.

By default, if a specific thread is not provided by the user, the CLI uses the default thread and stores the history at ~/.chatgpt-cli/history/default.json. You can find more details about how to configure the thread parameter in the Configuration section of this document.
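The sliding window described above can be illustrated with a short sketch. This is not the CLI's implementation: `count_tokens` and `trim_history` are hypothetical helpers, and token counts are approximated by word counts purely for illustration. The idea is to drop the oldest messages until the remainder fits the budget.

```shell
# Hypothetical sketch of a sliding-window history trim.
# ASSUMPTION: tokens are approximated by whitespace-separated words.
count_tokens() {
  printf '%s' "$1" | wc -w | tr -d '[:space:]'
}

# Usage: trim_history BUDGET MESSAGE...
# Prints the messages that are kept, oldest first, one per line.
trim_history() {
  local budget="$1" total=0 msg
  shift
  # Sum the approximate token count of the whole history.
  for msg in "$@"; do
    total=$(( total + $(count_tokens "$msg") ))
  done
  # Drop the oldest message until the history fits the budget.
  while [ "$#" -gt 0 ] && [ "$total" -gt "$budget" ]; do
    total=$(( total - $(count_tokens "$1") ))
    shift
  done
  [ "$#" -gt 0 ] && printf '%s\n' "$@"
  return 0
}
```

For example, `trim_history 5 "one two three" "four five" "six seven eight"` drops the first message and keeps the last two, because the full history is 8 words and the budget is 5.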

- Try it out:

chatgpt what is the capital of the Netherlands

- To start interactive mode, use the -i or --interactive flag:

chatgpt --interactive

If you want the CLI to automatically create a new thread for each session, ensure that the auto_create_new_thread configuration variable is set to true. This will create a unique thread identifier for each interactive session.

- To use the pipe feature, create a text file containing some context. For example, create a file named context.txt with the following content:

Kya is a playful dog who loves swimming and playing fetch.

Then, use the pipe feature to provide this context to ChatGPT:

cat context.txt | chatgpt "What kind of toy would Kya enjoy?"

- To list all available models, use the -l or --list-models flag:

chatgpt --list-models

- For more options, see:

chatgpt --help

The ChatGPT CLI adopts a four-tier configuration strategy, with different levels of precedence assigned to flags, environment variables, a config.yaml file, and default values, in that respective order:

- Flags: Command-line flags have the highest precedence. Any value provided through a flag will override other configurations.

- Environment Variables: If a setting is not specified by a flag, the corresponding environment variable (prefixed with the name field from the config) will be checked.

- Config file (config.yaml): If neither a flag nor an environment variable is set, the value from the config.yaml file will be used.

- Default Values: If no value is specified through flags, config.yaml, or environment variables, the CLI will fall back to its built-in default values.
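The precedence order above can be sketched as a small lookup. This is an illustration rather than the CLI's code: `resolve_setting` is a hypothetical helper that takes the four candidate values in order and assumes an empty string means "not set".

```shell
# Hypothetical sketch of four-tier precedence: flag > environment > config.yaml > default.
# Usage: resolve_setting FLAG_VALUE ENV_VALUE FILE_VALUE DEFAULT_VALUE
# ASSUMPTION: an empty string means the tier did not provide a value.
resolve_setting() {
  local value
  for value in "$1" "$2" "$3"; do
    if [ -n "$value" ]; then
      printf '%s\n' "$value"
      return 0
    fi
  done
  # No higher tier supplied a value, so fall back to the built-in default.
  printf '%s\n' "$4"
}
```

With no flag, an environment value of `gpt-4`, and a config file value of `gpt-3.5-turbo-16k`, the call `resolve_setting "" "gpt-4" "gpt-3.5-turbo-16k" "gpt-3.5-turbo"` prints `gpt-4`.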

| Variable | Description | Default |
|---|---|---|
| name | The prefix for environment variable overrides. | 'openai' |
| thread | The name of the current chat thread. Each unique thread name has its own context. | 'default' |
| omit_history | If true, the chat history will not be used to provide context for the GPT model. | false |
| command_prompt | The command prompt in interactive mode. Should be single-quoted. | '[%datetime] [Q%counter]' |
| output_prompt | The output prompt in interactive mode. Should be single-quoted. | '' |
| auto_create_new_thread | If set to true, a new thread with a unique identifier (e.g., int_a1b2) will be created for each interactive session. If false, the CLI will use the thread specified by the thread parameter. | false |
| track_token_usage | If set to true, displays the total token usage after each query in --query mode, helping you monitor API usage. | false |
| debug | If set to true, prints the raw request and response data during API calls, useful for debugging. | false |
| skip_tls_verify | If set to true, skips TLS certificate verification, allowing insecure HTTPS requests. | false |
| multiline | If set to true, enables multiline input mode in interactive sessions. | false |

LLM-Specific Configuration

| Variable | Description | Default |
|---|---|---|
| api_key | Your API key. | (none for security) |
| model | The GPT model used by the application. | 'gpt-3.5-turbo' |
| max_tokens | The maximum number of tokens that can be used in a single API call. | 4096 |
| context_window | The memory limit for how much of the conversation can be remembered at one time. | 8192 |
| role | The system role. | 'You are a helpful assistant.' |
| temperature | What sampling temperature to use, between 0 and 2. Higher values make the output more random; lower values make it more focused and deterministic. | 1.0 |
| frequency_penalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far. | 0.0 |
| top_p | An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. | 1.0 |
| presence_penalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far. | 0.0 |
| seed | Sets the seed for deterministic sampling (Beta). Repeated requests with the same seed and parameters aim to return the same result. | 0 |
| url | The base URL for the OpenAI API. | 'https://api.openai.com' |
| completions_path | The API endpoint for completions. | '/v1/chat/completions' |
| models_path | The API endpoint for accessing model information. | '/v1/models' |
| auth_header | The header used for authorization in API requests. | 'Authorization' |
| auth_token_prefix | The prefix to be added before the token in the auth_header. | 'Bearer ' |

Custom Config and Data Directory

By default, ChatGPT CLI stores configuration and history files in the ~/.chatgpt-cli directory. However, you can easily override these locations by setting environment variables, allowing you to store configuration and history in custom directories.

| Environment Variable | Description | Default Location |
|---|---|---|
| OPENAI_CONFIG_HOME | Overrides the default config directory path. | ~/.chatgpt-cli |
| OPENAI_DATA_HOME | Overrides the default data directory path. | ~/.chatgpt-cli/history |

Example for Custom Directories

To change the default configuration or data directories, set the appropriate environment variables:

export OPENAI_CONFIG_HOME="/custom/config/path"
export OPENAI_DATA_HOME="/custom/data/path"

If these environment variables are not set, the application defaults to ~/.chatgpt-cli for configuration files and ~/.chatgpt-cli/history for history.
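The fallback behaviour can be sketched with shell parameter expansion. The helpers below are hypothetical and only mirror the documented defaults. In particular, `history_file` assumes every thread is stored as `<thread>.json` in the data directory, which the text above only confirms for the default thread.

```shell
# Hypothetical helpers mirroring the documented directory defaults.
config_home() {
  printf '%s\n' "${OPENAI_CONFIG_HOME:-$HOME/.chatgpt-cli}"
}

data_home() {
  printf '%s\n' "${OPENAI_DATA_HOME:-$HOME/.chatgpt-cli/history}"
}

# Usage: history_file [THREAD]
# ASSUMPTION: non-default threads follow the same <thread>.json naming.
history_file() {
  printf '%s/%s.json\n' "$(data_home)" "${1:-default}"
}
```

With `OPENAI_DATA_HOME` unset, `history_file` prints the default thread's path under `~/.chatgpt-cli/history`; with it set to `/custom/data/path`, the same call prints `/custom/data/path/default.json`.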

Variables for interactive mode:

- %date: The current date in the format YYYY-MM-DD.

- %time: The current time in the format HH:MM:SS.

- %datetime: The current date and time in the format YYYY-MM-DD HH:MM:SS.

- %counter: The total number of queries in the current session.

- %usage: The usage in total tokens used (only works in query mode).
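To make the placeholder substitution concrete, here is a minimal sketch using `sed`. `expand_prompt` is a hypothetical helper, not the CLI's own code. It takes the date, time, and counter as arguments so the result is reproducible, and it leaves `%usage` untouched because that value comes from the API response.

```shell
# Hypothetical sketch: expand prompt placeholders in a template string.
# Usage: expand_prompt TEMPLATE DATE TIME COUNTER
expand_prompt() {
  # %datetime is replaced before %date so the shorter name does not clobber it.
  printf '%s\n' "$1" | sed \
    -e "s/%datetime/$2 $3/g" \
    -e "s/%date/$2/g" \
    -e "s/%time/$3/g" \
    -e "s/%counter/$4/g"
}
```

For the default command_prompt, `expand_prompt '[%datetime] [Q%counter]' 2024-01-02 03:04:05 7` yields `[2024-01-02 03:04:05] [Q7]`. To use the current clock, pass `"$(date +%F)"` and `"$(date +%T)"` as the date and time.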

The defaults can be overridden by providing your own values in the user configuration file. The structure of this file mirrors that of the default configuration. For instance, to override the model and max_tokens parameters, your file might look like this:

model: gpt-3.5-turbo-16k
max_tokens: 4096

This alters the model to gpt-3.5-turbo-16k and adjusts max_tokens to 4096. All other options, such as url, completions_path, and models_path, can similarly be modified. If the user configuration file cannot be accessed or is missing, the application will resort to the default configuration.

Another way to adjust values without manually editing the configuration file is by using environment variables. The name attribute forms the prefix for these variables. As an example, the model can be modified using the OPENAI_MODEL environment variable. Similarly, to disable history during the execution of a command, use:

OPENAI_OMIT_HISTORY=true chatgpt what is the capital of Denmark?

This approach is especially beneficial for temporary changes or for testing varying configurations.
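The naming rule for these overrides can be sketched in one line. `env_var_name` is a hypothetical helper that joins the name prefix and the setting with an underscore and upper-cases the result, matching the OPENAI_MODEL and OPENAI_OMIT_HISTORY examples above.

```shell
# Hypothetical helper: derive the environment variable for a setting.
# Usage: env_var_name PREFIX SETTING
env_var_name() {
  printf '%s_%s\n' "$1" "$2" | tr '[:lower:]' '[:upper:]'
}
```

For example, `env_var_name openai omit_history` prints `OPENAI_OMIT_HISTORY`, and with `name: azure` in the config the API key variable becomes `AZURE_API_KEY`.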

Moreover, you can use the --config or -c flag to view the current configuration. This lets you verify your settings quickly without manually inspecting the configuration files:

chatgpt --config

Executing this command will display the active configuration, including any overrides instituted by environment variables or the user configuration file.

To make quick adjustments easier, the ChatGPT CLI provides flags that modify the model, thread, context-window, and max_tokens parameters in your user config.yaml. These flags are --set-model, --set-thread, --set-context-window, and --set-max-tokens.

For instance, to update the model, use the following command:

chatgpt --set-model gpt-3.5-turbo-16k

This feature allows for rapid changes to key configuration parameters, optimizing your experience with the ChatGPT CLI.

For Azure, use a configuration similar to:

name: azure
api_key:
model:
max_tokens: 4096
context_window: 8192
role: You are a helpful assistant.
temperature: 1
top_p: 1
frequency_penalty: 0
presence_penalty: 0
thread: default
omit_history: false
url: https://.openai.azure.com
completions_path: /openai/deployments//chat/completions?api-version=
models_path: /v1/models
auth_header: api-key
auth_token_prefix: " "
command_prompt: '[%datetime] [Q%counter]'
auto_create_new_thread: false
track_token_usage: false
debug: false

You can set the API key either in the config.yaml file as shown above or export it as an environment variable:

export AZURE_API_KEY=

For Perplexity, use a configuration similar to:

name: perplexity
api_key: ""
model: llama-3.1-sonar-small-128k-online
max_tokens: 4096
context_window: 8192
role: Be precise and concise.
temperature: 1
top_p: 1
frequency_penalty: 0
presence_penalty: 0
thread: test
omit_history: false
url: https://api.perplexity.ai
completions_path: /chat/completions
models_path: /models
auth_header: Authorization
auth_token_prefix: 'Bearer '
command_prompt: '[%datetime] [Q%counter] [%usage]'
auto_create_new_thread: true
track_token_usage: true
debug: false

You can set the API key either in the config.yaml file as shown above or export it as an environment variable:

export PERPLEXITY_API_KEY=

Command-Line Autocompletion

Enhance your CLI experience with our new autocompletion feature for command flags!

Autocompletion is currently supported for the following shells: Bash, Zsh, Fish, and PowerShell. To activate flag completion in your current shell session, execute the appropriate command based on your shell:

- Bash: . <(chatgpt --set-completions bash)

- Zsh: . <(chatgpt --set-completions zsh)

- Fish: chatgpt --set-completions fish | source

- PowerShell: chatgpt --set-completions powershell | Out-String | Invoke-Expression

Persistent Autocompletion

For added convenience, you can make autocompletion persist across all new shell sessions by adding the appropriate sourcing command to your shell’s startup file. Here are the files typically used for each shell:

- Bash: Add to .bashrc or .bash_profile

- Zsh: Add to .zshrc

- Fish: Add to config.fish

- PowerShell: Add to your PowerShell profile script

For example, for Bash, you would add the following line to your .bashrc file:

. <(chatgpt --set-completions bash)

This ensures that command flag autocompletion is enabled automatically every time you open a new terminal window.
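If you script your shell setup, it helps to add the sourcing line only once so repeated runs do not duplicate it. The sketch below uses a hypothetical helper, `add_line_once`, built on `grep -qxF` for an exact whole-line match. It is a convenience example and not something the CLI provides.

```shell
# Hypothetical helper: append LINE to FILE only if it is not already present.
# Usage: add_line_once FILE LINE
add_line_once() {
  local file="$1" line="$2"
  # Make sure the file exists so grep does not fail on a missing path.
  touch "$file"
  # -x matches whole lines, -F treats the line as a fixed string, -q stays silent.
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For Bash, this could be called as `add_line_once ~/.bashrc '. <(chatgpt --set-completions bash)'`. Running it a second time leaves the file unchanged.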

You can render markdown in real-time using the mdrender.sh script, located here. You’ll first need to install glow.

Example:

chatgpt write a hello world program in Java | ./scripts/mdrender.sh

To start developing, set the OPENAI_API_KEY environment variable to your ChatGPT secret key.

The Makefile simplifies development tasks by providing several targets for testing, building, and deployment.

- all-tests: Run all tests, including linting, formatting, and go mod tidy.

- binaries: Build binaries for multiple platforms.

- shipit: Run the release process, create binaries, and generate release notes.

- updatedeps: Update dependencies and commit any changes.

For more available commands, use:

- After a successful build, test the application with the following command:

./bin/chatgpt what type of dog is a Jack Russel?

- As mentioned previously, the ChatGPT CLI supports tracking conversation history across CLI calls. This creates a conversational experience with the GPT model, because the history is used as context in subsequent interactions.

To enable this feature, you need to create a ~/.chatgpt-cli directory using the command:

mkdir -p ~/.chatgpt-cli

Reporting Issues and Contributing

If you encounter any issues or have suggestions for improvements, please submit an issue on GitHub. We appreciate your feedback and contributions to help make this project better.

If for any reason you wish to uninstall the ChatGPT CLI application from your system, you can do so by following these steps:

If you installed the CLI using Homebrew you can do:

brew uninstall chatgpt-cli

And to remove the tap:

brew untap kardolus/chatgpt-cli

If you installed the binary directly, follow these steps:

- Remove the binary:

sudo rm /usr/local/bin/chatgpt

- Optionally, if you wish to remove the history tracking directory, you can also delete the ~/.chatgpt-cli directory:

rm -rf ~/.chatgpt-cli

- Navigate to the location of the chatgpt binary in your system, which should be in your PATH.

- Delete the chatgpt binary.

- Optionally, if you wish to remove the history tracking, navigate to the ~/.chatgpt-cli directory (where ~ refers to your user’s home directory) and delete it.

Please note that the history tracking directory ~/.chatgpt-cli only contains conversation history and no personal data. If you have any concerns about this, please feel free to delete this directory during uninstallation.

Thank you for using ChatGPT CLI!

Published By

Latest entries

allPost2025.01.21Former border deputy reacts to Trump’s executive order on immigration

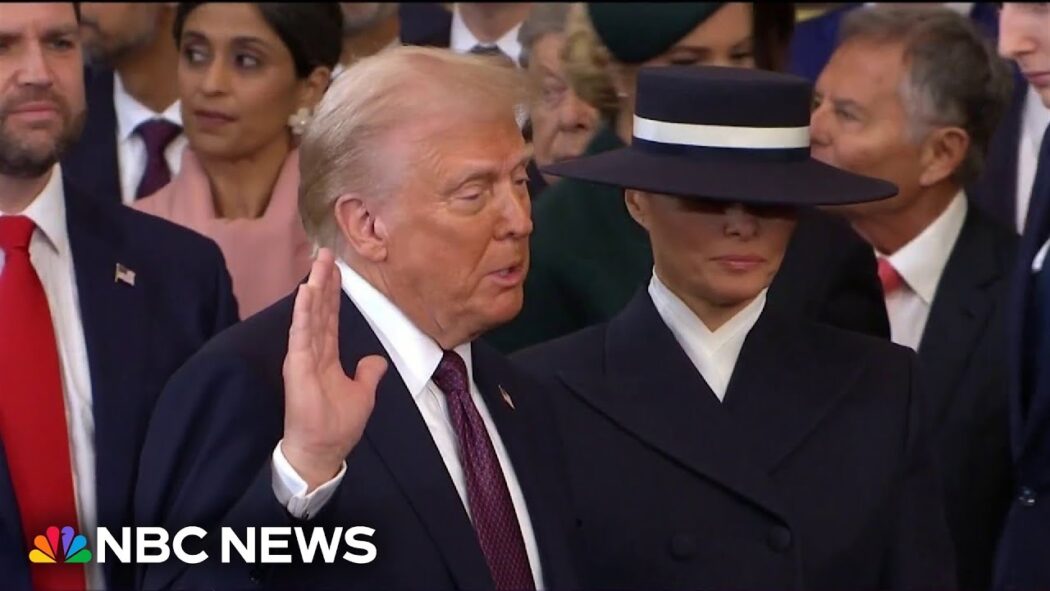

allPost2025.01.21Former border deputy reacts to Trump’s executive order on immigration allPost2025.01.21Trump becomes 47th president, capping remarkable political comeback

allPost2025.01.21Trump becomes 47th president, capping remarkable political comeback allPost2025.01.21Highlights from President Trump’s inauguration events at the U.S. Capitol

allPost2025.01.21Highlights from President Trump’s inauguration events at the U.S. Capitol allPost2025.01.21Trump targets immigration with executive orders

allPost2025.01.21Trump targets immigration with executive orders