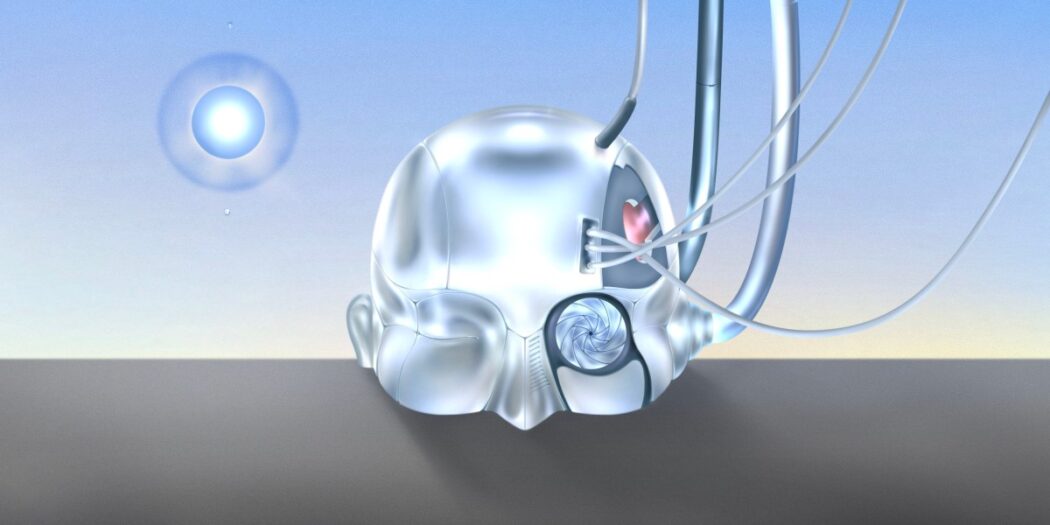

The past year has also seen a raft of projects in which AI has been trained on automatically generated data. Face-recognition systems are being trained with AI-generated faces, for example. AIs are also learning how to train each other. In one recent example, two robot arms worked together, with one arm learning to set tougher and tougher block-stacking challenges that trained the other to grip and grasp objects.

In fact, Clune wonders if human intuition about what kind of data an AI needs in order to learn may be off. For example, he and his colleagues have developed what he calls generative teaching networks, which learn what data they should generate to get the best results when training a model. In one experiment, he used one of these networks to adapt a data set of handwritten numbers that's often used to train image-recognition algorithms. What it came up with looked very different from the original human-curated data set: hundreds of not-quite digits, such as the top half of the figure seven or what looked like two digits merged together. Some of the AI-generated examples were barely decipherable. Despite this, the AI-generated data still did a great job of training the handwriting-recognition system to identify actual digits.

Don’t try to succeed

AI-generated data is still just a part of the puzzle. The long-term vision is to take all these techniques—and others not yet invented—and hand them over to an AI trainer that controls how artificial brains are wired, how they are trained, and what they are trained on. Even Clune is not clear on what such a future system would look like. Sometimes he talks about a kind of hyper-realistic simulated sandbox, where AIs can cut their teeth and skin their virtual knees. Something that complex is still years away. The closest thing yet is POET, the system Clune created with Uber’s Rui Wang and others.

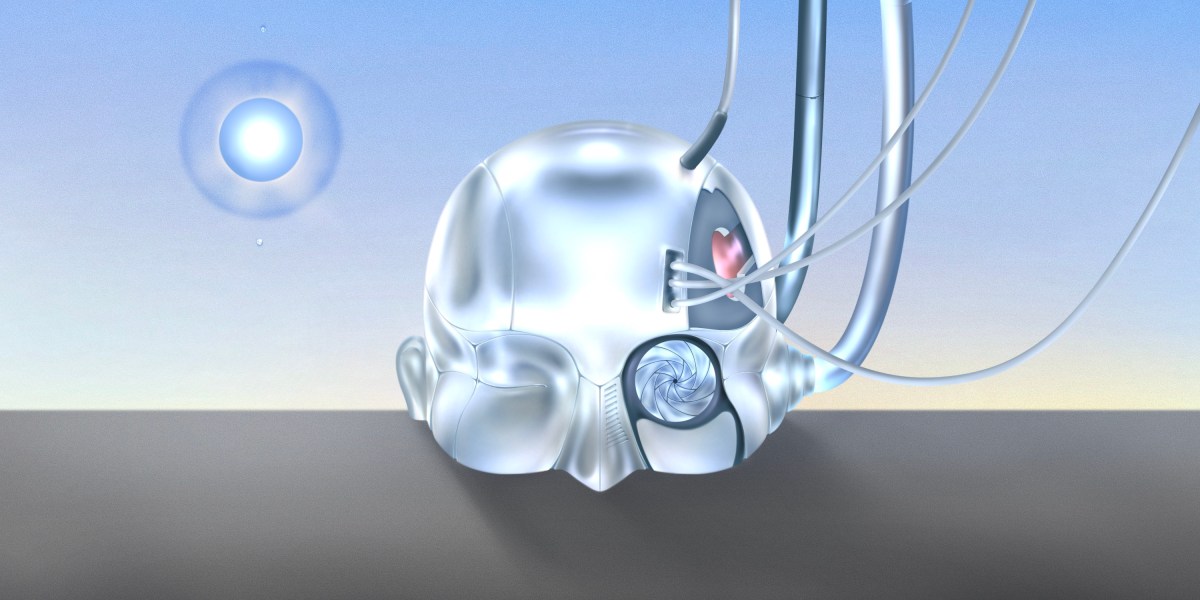

POET was motivated by a paradox, says Wang. If you try to solve a problem, you'll fail; if you don't try to solve it, you're more likely to succeed. This is one of the insights Clune takes from his analogy with evolution: amazing results that emerge from an apparently random process often cannot be re-created by taking deliberate steps toward the same end. There's no doubt that butterflies exist, but rewind to their single-celled precursors and try to create them from scratch by choosing each step from bacterium to bug, and you'd likely fail.

POET starts its two-legged agent off in a simple environment, such as a flat path without obstacles. At first the agent doesn’t know what to do with its legs and cannot walk. But through trial and error, the reinforcement-learning algorithm controlling it learns how to move along flat ground. POET then generates a new random environment that’s different, but not necessarily harder to move in. The agent tries walking there. If there are obstacles in this new environment, the agent learns how to get over or across those. Every time an agent succeeds or gets stuck, it is moved to a new environment. Over time, the agents learn a range of walking and jumping actions that let them navigate harder and harder obstacle courses.

The team found that random switching of environments was essential.
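The loop described above can be sketched in a few lines of Python. This is a toy illustration under loudly stated assumptions, not the POET code from Uber: an "environment" is reduced to a single hypothetical obstacle height, an agent to a single hypothetical `skill` number, and random hill climbing stands in for the reinforcement-learning step. The constant `BASE_SKILL` and all function names are invented for illustration. The published POET also keeps a population of environment-agent pairs and tries transferring agents between them, which this sketch omits.

```python
import random
from dataclasses import dataclass

BASE_SKILL = 0.5  # hypothetical: skill needed just to walk on a flat path


@dataclass
class Env:
    """Hypothetical toy environment: one obstacle of a given height (0.0 = flat path)."""
    obstacle_height: float


def reward(skill: float, env: Env) -> float:
    """Toy reward in [0, 1]; 1.0 means the agent walks the course and clears the obstacle."""
    required = BASE_SKILL + env.obstacle_height
    return min(1.0, skill / required)


def train(skill: float, env: Env, rng: random.Random,
          steps: int = 200, patience: int = 20) -> float:
    """Trial-and-error stand-in for RL: keep random tweaks that raise the reward.

    Stops early when the agent succeeds (reward 1.0) or gets stuck
    (no improvement for `patience` consecutive tries).
    """
    best, stale = reward(skill, env), 0
    for _ in range(steps):
        if best >= 1.0 or stale >= patience:
            break
        candidate = max(0.0, skill + rng.gauss(0.0, 0.1))
        r = reward(candidate, env)
        if r > best:
            skill, best, stale = candidate, r, 0
        else:
            stale += 1
    return skill


def mutate(env: Env, rng: random.Random) -> Env:
    """New random environment: different, but not necessarily harder."""
    return Env(max(0.0, env.obstacle_height + rng.uniform(-0.2, 0.3)))


def poet_like_loop(iterations: int = 50, seed: int = 0) -> tuple[float, list[Env]]:
    rng = random.Random(seed)
    env, skill = Env(0.0), 0.0          # flat path, untrained agent that cannot walk yet
    history = [env]
    for _ in range(iterations):
        skill = train(skill, env, rng)  # learn in the current environment
        env = mutate(env, rng)          # succeeded or stuck: move to a new environment
        history.append(env)
    return skill, history


if __name__ == "__main__":
    final_skill, envs = poet_like_loop()
    print(f"final skill {final_skill:.2f}, last obstacle {envs[-1].obstacle_height:.2f}")
```

Because `mutate` can lower the obstacle as well as raise it, difficulty drifts upward on average rather than climbing in a straight line, loosely mirroring the article's point that new environments are different but not necessarily harder.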